Beyond the Hype: Rediscovering the Value of Relational Data Models

The Practical Benefits of Database Constraints in Production Systems

The last few years have been busy scorning traditional relational databases and data models. But why, actually? Don't they have plenty of strengths? If you look at current trends, everyone seems to say, "Use a single detached table, skip relations." Flat tables can be appealing because they're simple to read and fast to query. Command Query Responsibility Segregation (CQRS) is built on that idea too: the tradeoff is extra data copies for quick reads. I tried CQRS for the first time in 2013. It felt like a superpower. But there was a price. All those event handlers and separate write models take time and energy to maintain.

Of course, CQRS isn't the only pattern that nudges us away from a relational data model. People say, "Store data per use case." Sometimes that's a table in SQL, or maybe MongoDB, or even just a file in S3. This is called polyglot persistence. But why do we jump in that direction? Because it's trendy? Then, before we notice, we've created a mess of mismatched data. Relational databases shine for a reason: consistency and integrity. We shouldn't ignore them. They bring real benefits.

Interestingly, some large enterprises that initially embraced polyglot persistence or event sourcing have found themselves reverting to traditional relational models to reduce complexity and regain data consistency. For instance, The Guardian switched from MongoDB to PostgreSQL to simplify operations and cut costs, noting that managing Mongo clusters was more expensive and cumbersome than relying on a robust relational engine. Another example is Olery, a SaaS provider that retired MongoDB in favor of PostgreSQL after facing ongoing issues with performance and schema drift, discovering in the end that a relational schema with ACID transactions was far easier to maintain.

Let’s make it more concrete. Imagine you manage an online store. You have a products table, a categories table, and maybe an orders table. If you're not careful with relations, you could delete a category but still have products pointing to it. You might miss an update to a product name in one place, yet keep the old name in another. That's inconsistent data. A proper relational model can stop that from happening by linking tables with foreign keys and enforcing referential integrity. It goes beyond just storing data. It protects the meaning of the data.

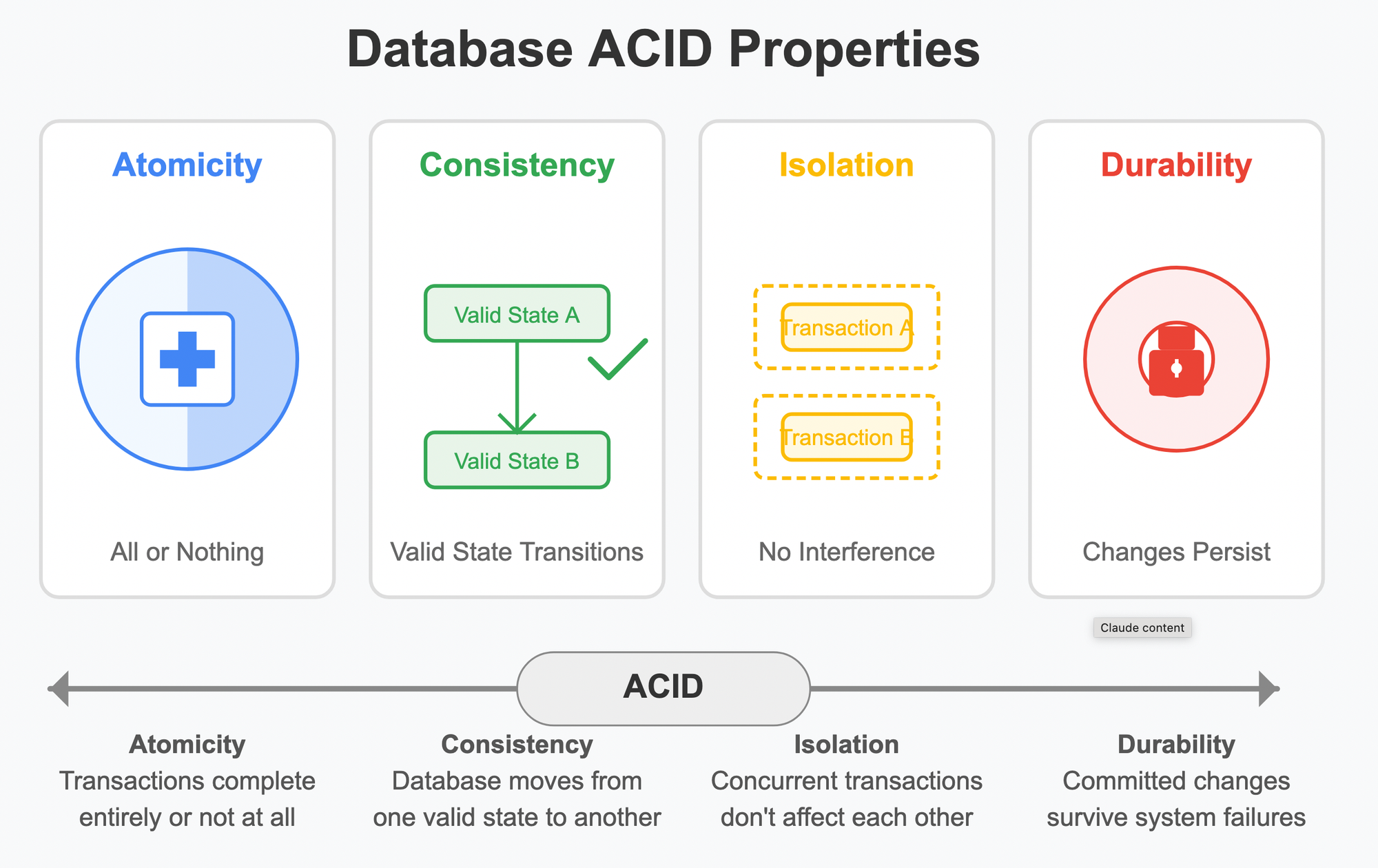

Another reason consistency matters is when multiple processes try to change the same data at once. In a relational database, transactions help keep the system stable. They ensure you don't end up with half-updated rows or confusing race conditions. It sounds like a small detail until you realize that a mismatch can break your entire logic. You can avoid those headaches with solid constraints, properly designed relations, and the safety net of ACID transactions. ACID stands for Atomicity, Consistency, Isolation, Durability. These are key properties that ensure data integrity in relational systems.

Don't let the hype with all this buzzwords like polyglot persistence fool you into throwing out the strong foundation that relational databases provide. Consistency and integrity aren't buzzwords. They're insurance. They protect data from turning into chaos over time, and that adds real value.

Referential Integrity

Referential integrity is one of the key strengths of a relational database. By linking tables with foreign keys, we ensure that references between them always stay valid. Then come the cascades. They tell the database what to do if a parent record is changed or deleted. ON DELETE CASCADE might remove all child records automatically when the parent goes away, while ON DELETE RESTRICT stops you from removing a parent if children still exist. Sounds straightforward, but you have to set these rules with care.

Imagine you have a users table linked to addresses. If you casually set DELETE CASCADE, you might wipe out addresses the second you remove a user. If that's exactly what you want, fine. But if it means losing valuable data in the process, it's a nightmare. On the flip side, RESTRICT could leave you stuck with a user you can’t delete because you forgot to handle old addresses. So it’s really about understanding your domain and choosing the appropriate option.

Another point is that foreign keys are more than just references. They stop us from inserting nonsense, like an address that points to a user who doesn’t exist at all. That alone can save you a bunch of headaches when you realize how quickly data can spin out of control without these checks. The database effectively enforces your business rules for you, so you don’t need to write custom scripts or rely on application logic to keep everything in sync.

That’s what makes relational databases so solid. They’re not just about storing rows and columns. They’re about ensuring consistency and integrity at the core. Modern solutions sometimes skip these constraints and move that logic elsewhere, but that often leads to confusion later. We should remember why foreign keys and cascades are there in the first place. They protect us from chaos and give us a stable platform for everything else we build.

Consistency

At its core, it means the data we store actually matches the data we expect. In a relational database, that’s usually guaranteed by ACID transactions. With them, you can start a transaction, make your changes, and either commit everything or roll it back if something goes wrong. No half-updated records. No race conditions that corrupt your data. That might sound obvious, but in systems without these safeguards, you can easily end up with incomplete or conflicting data. And if that happens, cleaning it up isn’t fun.

Think of an online booking system. Two people can’t book the same seat on a plane. Without consistent transactions, it’s easy to imagine multiple processes updating the same seat at the same time. One might “win,” but then the other sneaks in a second later. Now you’ve got a seat double-booked, and a passenger who’s unhappy. With proper transaction isolation, one update waits for the other to finish. Either the seat is free, or it’s taken. Clear and consistent.

It’s not just about concurrency, though. The database also helps you maintain consistent rules across the whole schema. You might forbid empty names or require certain fields to match patterns. These checks are part of consistency too. They ensure the data you store won’t break your application later. Yes, you can manage those rules on the application side, but having them baked into the database means fewer surprises if someone accidentally slips in a manual update or tries to bypass validations.

All of that ties back to why a relational model is still so appealing. It’s not just about storing data. It’s about ensuring the data is accurate and coherent, even when lots of operations happen at once. That reliability is a big reason why so many core systems rely on relational databases, even when newer or more specialized tools appear. If we ignore consistency, we open the door to a world of confusing data states and manual fixes. A well-built relational schema provides a safety net that just makes sense.

My Return to a Real Data Model

I’ve decided to go back to using a relational data model in my large projects because storing and managing data may be technically separated from processes, but it’s still part of the domain. If I don’t treat that with respect, I’ll eventually pay for it when the data becomes inconsistent. Consistency, integrity, and solid performance are all benefits of a careful relational design.

I prefer using CQS (Command Query Separation) as a more natural approach of splitting read and write operations without necessarily employing distinct data stores. Full-blown CQRS in contrast involves event sourcing and projections and separate databases (EventStore + Read Models).

-- Create the `tenant` schema

CREATE SCHEMA IF NOT EXISTS tenant;

CREATE TABLE tenant.owners (

id UUID PRIMARY KEY NOT NULL,

tenant_id UUID UNIQUE NOT NULL REFERENCES tenant.tenants(id) ON DELETE CASCADE,

email VARCHAR(250) UNIQUE NOT NULL,

external_user_id VARCHAR(250) NULL,

created_at TIMESTAMP DEFAULT NOW()

);

ALTER TABLE tenant.owners ENABLE ROW LEVEL SECURITY;

Practically speaking, I create SQL scripts and manage them from the application side using DbUp. It’s a simple, lean approach. No mystical layers that hide what’s going on. On top of that, I organize my code around self-contained features. I don’t rely on a central, massive data model that an ORM might impose. Instead, I use a SQL provider and Dapper for queries to keep things clear and efficient. Dapper helps turn raw SQL results into objects without too much hassle, but it doesn’t force a global model on me.

Feature Slices and Clear Boundaries

Each feature in my application has its own slice of the domain. That slice defines the data needed to handle a particular request or command. Maybe that’s a “request model” or a “command model.” Either way, the business rules live right inside the feature. This design keeps coherence high and dependencies low. I keep my SQL inside the command handler but separate out the actual database execution logic so I can test without tying everything to a live database. It’s straightforward, and it exposes the data model as part of the domain. Because let’s face it: without data, there wouldn’t be much of a system at all.

SQL is expressive. What should be the reason to hide this?

Here an example how a command handler can be easy to understand, lean and expressive.

public sealed class UpdateAssetCommandHandler(IDatabaseExecutor dbExecutor)

: IRequestHandler<UpdateAssetCommand, Result<UpdateAssetResponse>>

{ public async Task<Result<UpdateAssetResponse>> Handle(UpdateAssetCommand request, CancellationToken ct)

{

// Check if asset exists and belongs to tenant

const string checkExistsSql = """

SELECT COUNT(1)

FROM tracking.assets

WHERE id = @Id AND tenant_id = @TenantId

""";

var existsResult = await dbExecutor.QueryAsync<int>(checkExistsSql, new { request.Id, request.TenantId });

if (!existsResult.IsSuccess)

return Result<UpdateAssetResponse>.Failure(existsResult.Errors);

if (existsResult.Value == 0)

return Result<UpdateAssetResponse>.Failure(["Asset not found"]);

// Check name uniqueness

const string checkNameSql = """

SELECT COUNT(1)

FROM tracking.assets

WHERE tenant_id = @TenantId

AND name = @Name

AND id != @Id

AND is_retired = false

""";

var nameResult = await dbExecutor.QueryAsync<int>(checkNameSql, new { request.TenantId, request.Name, request.Id });

if (!nameResult.IsSuccess)

return Result<UpdateAssetResponse>.Failure(nameResult.Errors);

if (nameResult.Value > 0)

return Result<UpdateAssetResponse>.Failure(["Asset name already in use by another active asset"]);

// Update asset

const string updateSql = """

UPDATE tracking.assets

SET name = @Name,

description = @Description,

attributes = @Attributes::jsonb,

updated_at = NOW()

WHERE id = @Id AND tenant_id = @TenantId

RETURNING id, name, description, attributes

""";

var updateResult = await dbExecutor.QueryAsync<UpdateAssetResponse>(

updateSql,

new

{

request.Id,

request.TenantId,

request.Name,

request.Description,

Attributes = request.Attributes != null ? JsonSerializer.Serialize(request.Attributes) : null

}

);

return updateResult is { IsSuccess: true, Value: not null }

? Result<UpdateAssetResponse>.Success(updateResult.Value)

: Result<UpdateAssetResponse>.Failure(updateResult.Errors);

}

}

On the query side, I do something similar. Each query feature has its own representation of the data. I don’t need a shared, global model. I only fetch what I require (maybe columnA and columnB from tableA) to build a User object that fits the current scenario. In another query, a User might look different because I only need some of its fields. That keeps each feature tight, expressive, and free of clutter.

public sealed record User(Guid Id, string Name);

// ...

string sql = "SELECT id, name FROM users";

var results = await conn.QueryAsync<User>(sql);

This avoids things like DTO suffixes or strange artificial class names to distinguish different types in a large model we had in earlier years, like UserInfo to indicate its not the full user model and so on.

When Polyglot Persistence Backfires: A Real-World Example

A good example of why consistency matters comes from Olery, a hospitality analytics startup. They initially embraced polyglot persistence by mixing MySQL for transactional data with MongoDB for large, unstructured review data. Over time, however, they found themselves facing severe performance issues and unpredictable lock-ups. Schema drift (IMO a natural consequence of MongoDB’s flexibility) eventually forced them into constant schema checks within their application code, making data consistency fragile and difficult to manage. After significant struggles with debugging and operational complexity, they decided to consolidate everything into PostgreSQL. The transition was challenging, involving careful schema redesign and data migration, but the benefits were immediate: dramatically improved performance, reduced complexity, and consistent data enforced at the database level. Olery's experience is a strong reminder that trendy approaches might seem appealing initially, but over time, the clarity and stability of a well-structured relational model often proves more valuable.

CQRS Isn't a Free Lunch

CQRS isn’t inherently good or bad. It’s just a tool. Even Martin Fowler, who helped popularize it, warns against using CQRS everywhere. According to Fowler, introducing CQRS into a domain that doesn’t specifically require it "adds complexity, reducing productivity and increasing risk." That aligns perfectly with my own view: CQRS is helpful when you genuinely need separate read and write models for scale or complexity, but in most cases, I rely on relational databases for their consistency, integrity, and simplicity.

I still enjoy CQRS for carefully chosen scenarios, but it’s always about keeping complexity manageable. My approach is straightforward: a well-designed relational model provides consistency, integrity, and clear data management, reducing the need for complex data synchronization or duplicated logic.

Conclusion

Relational databases sometimes get criticized for requiring upfront schema design, but that's also their strength. Polyglot persistence or complex event-driven architectures often introduce hidden complexity, something teams like The Guardian and Olery found out firsthand. Both companies initially adopted MongoDB for flexibility, but later reverted to relational databases like PostgreSQL due to operational simplicity and schema integrity. Similarly, DNA Technology stepped back from event sourcing to a relational database to significantly reduce complexity. This reminds us that while new techniques promise flexibility, traditional relational models often deliver greater simplicity and robustness over time, especially when maintaining the integrity and consistency of core business data.

Cheers!