How to Enforce Consistency Without Aggregates in Event-Sourced Systems

A Practical Approach Using Context-Scoped Filters and SQL

Consistency is essential. However, the way in which it is enforced is more important than most developers realize.

In traditional event-sourced systems, consistency is tied to the aggregate. You replay past events to restore the aggregate state, make a decision, and then try to append new events, but only if the version of the aggregate hasn’t changed. This approach is safe, but also coarse. It assumes that any change to the aggregate renders every command unsafe.

Today, however, I think differently. I’ve shifted the consistency boundary to the command context. I no longer assume that a central state object needs to enforce consistency. Instead, I now enforce consistency per command, relative to the event context that the command actually reads.

This might sound like a small difference, but it fundamentally changes how you design and evolve behavior. You stop coupling unrelated commands just because they operate on the same entity. You gain flexibility, clarity and fewer unnecessary conflicts.

In this article, I’ll explain the technicalities step by step. This will include the exact interface, logic and SQL query that we use in our PostgreSQL event store implementation.

How Aggregate Versioning Works (And Why It’s Too Broad)

In most event-sourced systems, consistency is enforced using aggregate versioning. The idea is simple: every time you apply a command, you load the aggregate’s event history, make a decision, and try to append new events, but only if no other events have been added in the meantime.

This is usually implemented by tracking a version number (or the highest sequence number) of the last event. When appending, the system checks if that version still matches. If not, the command fails and must be retried.

This works. But it’s a coarse-grained guarantee.

Let’s say two commands both target the same InventoryItem aggregate:

- CheckOutInventory relies on past check-ins and audits.

- RenameItem changes the display name of the item.

They don’t care about each other. But because they touch the same aggregate, they block each other. A rename operation that has nothing to do with stock levels can make a valid check-out fail.

That’s not enforcing meaningful consistency. That’s just treating all change as equal regardless of whether it affects the decision being made.
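
The false conflict can be reproduced with a few lines of code. The following is a minimal sketch, not taken from any library: `AggregateStream`, its `load` and `append` methods, and the payload fields are hypothetical names chosen for illustration, and the aggregate version is assumed to be the number of stored events.

```typescript
// Hypothetical in-memory stream guarded by a single aggregate version.
type StoredEvent = { type: string; payload: Record<string, unknown> };

class AggregateStream {
  private events: StoredEvent[] = [];

  // Returns the full history plus the aggregate version (here: the event count).
  load(): { events: StoredEvent[]; version: number } {
    return { events: [...this.events], version: this.events.length };
  }

  // Appends only if nothing at all was written since `expectedVersion` was read.
  // Returns false on a version mismatch instead of appending.
  append(newEvents: StoredEvent[], expectedVersion: number): boolean {
    if (this.events.length !== expectedVersion) {
      return false;
    }
    this.events.push(...newEvents);
    return true;
  }
}

const item = new AggregateStream();
item.append([{ type: 'InventoryCheckedIn', payload: { quantity: 10 } }], 0);

// Two commands load the same version concurrently.
const checkOutView = item.load();
const renameView = item.load();

// RenameItem commits first ...
const renameAccepted = item.append(
  [{ type: 'ItemRenamed', payload: { name: 'Widget v2' } }],
  renameView.version
);

// ... so the check-out is rejected, although the rename never touched stock.
const checkOutAccepted = item.append(
  [{ type: 'InventoryCheckedOut', payload: { quantity: 2 } }],
  checkOutView.version
);
```

Here `renameAccepted` ends up `true` and `checkOutAccepted` ends up `false`, even though ten units are still in stock.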

This kind of model introduces:

- False conflicts, where unrelated commands fail due to irrelevant changes

- Unnecessary retries, even if the business rule was still satisfied

- Coupling by identity, where logic becomes entangled just because it's routed through the same aggregate

It’s safe, but it’s also a bottleneck. The solution is to shift the boundary.

Command Context Consistency

Instead of versioning the whole aggregate, we enforce consistency relative to the event context a command actually uses to make its decision.

Each command defines a filter: a combination of event types and optionally payload criteria that describe the subset of events it cares about. This filter forms the command context. When the command is run, it queries the store for all matching events and reads the highest sequence number (maxSequenceNumber) found.

This maxSequenceNumber is a version of the context, rather than of the entire entity.

When the command emits new events, it includes the same filter and the maxSequenceNumber observed. The append will only succeed if the number hasn't changed, meaning that no relevant events have been added in the meantime.

What’s different here?

- If something changed outside the command’s context, the command proceeds.

- If something changed within the context, the append fails, and the command must be retried using a fresh query and decision.

This aligns consistency with causality:

- You don’t block unrelated operations.

- You only reject commands that might now produce a different result based on new facts.

This is especially powerful in systems with broad event types and many loosely coupled rules. Instead of centralizing everything into one object, you let each decision stand on its own, based on the facts it actually needs.

The result is leaner logic, fewer conflicts, and a clearer connection between what a command reads and what it protects.

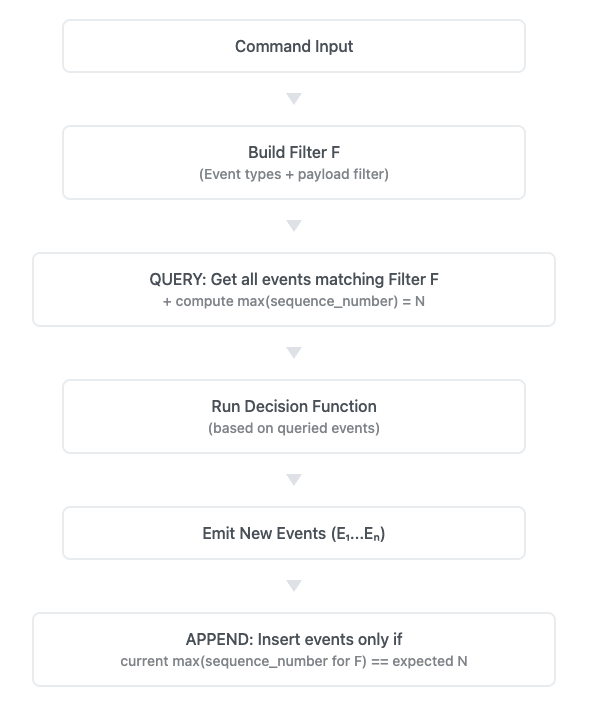

The Core Algorithm: Query + Append with Context Check

At the heart of this model is a simple two-step process:

- Query the event store using a command-specific filter

- Append new events only if the highest sequence number of the context hasn’t changed

This is the core idea behind command context consistency: the command operates within a filtered view of the event log, and appends are allowed only if that view remains unchanged between reading and writing.

The Interface

Our EventStore interface reflects this pattern directly:

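    export interface EventStore {
      // Loads all events matching the filter, plus the context's maxSequenceNumber.
      query(filter: EventFilter): Promise<QueryResult>;
      // Appends events; if filter and expectedMaxSequenceNumber are given,
      // the write only succeeds while the context is unchanged.
      append(
        events: Event[],
        filter?: EventFilter,
        expectedMaxSequenceNumber?: number
      ): Promise<void>;
    }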

- query() loads all events matching a given EventFilter and returns both the events and the highest sequence number of the query context (maxSequenceNumber).

- append() writes new events, but only if the maxSequenceNumber for the same filter still matches the expected value.

This gives you causal protection per command.
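
To make the semantics of the two methods tangible, here is a minimal in-memory sketch of the interface. It is an illustration under stated assumptions, not the PostgreSQL implementation: the shapes of `Event`, `EventFilter` and `QueryResult` are inferred from the examples in this article, payload predicates are assumed to match on top-level keys with any one predicate being sufficient, an empty context is assumed to have version 0, and a failed append is assumed to reject with an error.

```typescript
interface Event { eventType: string; payload: Record<string, unknown>; }
interface EventFilter { eventTypes: string[]; payloadPredicates?: Record<string, unknown>[]; }
interface QueryResult { events: Event[]; maxSequenceNumber: number; }

interface EventStore {
  query(filter: EventFilter): Promise<QueryResult>;
  append(events: Event[], filter?: EventFilter, expectedMaxSequenceNumber?: number): Promise<void>;
}

// Illustrative only: a single-process store that keeps the log in an array.
class InMemoryEventStore implements EventStore {
  private log: { sequenceNumber: number; event: Event }[] = [];

  // Assumption: type must match, and at least one predicate must match (if any are given).
  private matches(event: Event, filter: EventFilter): boolean {
    if (filter.eventTypes.indexOf(event.eventType) === -1) return false;
    const predicates = filter.payloadPredicates ?? [];
    return (
      predicates.length === 0 ||
      predicates.some((p) => Object.keys(p).every((key) => event.payload[key] === p[key]))
    );
  }

  // Synchronous read of the context, so check and write cannot be interleaved.
  private readContext(filter: EventFilter): QueryResult {
    const hits = this.log.filter((entry) => this.matches(entry.event, filter));
    const maxSequenceNumber = hits.reduce((max, e) => Math.max(max, e.sequenceNumber), 0);
    return { events: hits.map((entry) => entry.event), maxSequenceNumber };
  }

  async query(filter: EventFilter): Promise<QueryResult> {
    return this.readContext(filter);
  }

  async append(events: Event[], filter?: EventFilter, expectedMaxSequenceNumber?: number): Promise<void> {
    if (filter !== undefined && expectedMaxSequenceNumber !== undefined) {
      const current = this.readContext(filter).maxSequenceNumber;
      if (current !== expectedMaxSequenceNumber) {
        throw new Error(`Context changed: expected ${expectedMaxSequenceNumber}, found ${current}`);
      }
    }
    for (const event of events) {
      this.log.push({ sequenceNumber: this.log.length + 1, event });
    }
  }
}
```

Calling `append` without a filter writes unconditionally, which is how events enter the store when a command does not depend on any prior facts.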

The Contract

Let’s make this explicit:

- A command queries the event store using a filter F

- It receives a QueryResult, which includes a set of relevant past events and their maxSequenceNumber, which is N.

- The decision function runs based on those events and returns a list of new events.

- When appending, we re-apply the same filter F, check whether the current maxSequenceNumber is still N, and append only if that condition holds.

If the maxSequenceNumber has changed, the context is no longer valid. Something relevant has happened since the decision was made. The append is aborted and the command must be retried, starting with a new query and decision.

This guarantees that every decision is made against the most recent context without assuming full control over an entire aggregate.
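
The contract translates into a small query-decide-append loop. The sketch below is one possible shape and makes several assumptions: `executeCommand`, `isContextConflict` and the `maxAttempts` parameter are hypothetical, and a rejected append is assumed to surface as an `Error` whose `name` is `'ContextConflictError'`. The way a real store signals the conflict may differ.

```typescript
interface Event { eventType: string; payload: Record<string, unknown>; }
interface EventFilter { eventTypes: string[]; payloadPredicates?: Record<string, unknown>[]; }
interface QueryResult { events: Event[]; maxSequenceNumber: number; }
interface EventStore {
  query(filter: EventFilter): Promise<QueryResult>;
  append(events: Event[], filter?: EventFilter, expectedMaxSequenceNumber?: number): Promise<void>;
}

// Assumption: the store marks context conflicts with this error name.
function isContextConflict(error: unknown): boolean {
  return error instanceof Error && error.name === 'ContextConflictError';
}

// Runs query -> decide -> conditional append, retrying only on context conflicts.
// Resolves with the number of attempts that were needed.
async function executeCommand(
  store: EventStore,
  filter: EventFilter,
  decide: (history: Event[]) => Event[],
  maxAttempts = 3
): Promise<number> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    // Step 1: read the context and remember its version N.
    const { events, maxSequenceNumber } = await store.query(filter);
    // Step 2: pure decision based only on the context.
    const newEvents = decide(events);
    try {
      // Step 3: append under the same filter F, expecting version N.
      await store.append(newEvents, filter, maxSequenceNumber);
      return attempt;
    } catch (error) {
      // Anything other than a context conflict, or the last attempt, is rethrown.
      if (!isContextConflict(error) || attempt === maxAttempts) throw error;
    }
  }
  throw new Error('maxAttempts must be at least 1');
}
```

Because the decision function is re-run on every attempt, a retry can legitimately end in a different outcome, including a business-rule failure thrown by `decide`.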

A Concrete Example: Check-Out Inventory Without False Conflicts

Imagine we’re developing a basic warehouse system. Each item can be checked in or out, renamed, or audited. All of these actions generate events that are stored in a single events table.

Now consider the following command: CheckOutInventory.

This command:

- Reduces stock for an item

- Fails if not enough stock is available

To make this decision, it needs a very specific context:

- Past InventoryCheckedIn events

- Past InventoryCheckedOut events

- Possibly InventoryAudited events (if used to correct quantity)
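
A decision function over this context could look like the sketch below. The event names come from the list above; everything else is an assumption made for illustration: the function names, the `countedQuantity` field on `InventoryAudited`, the rule that an audit overwrites the running stock level, and the expectation that events arrive in sequence order.

```typescript
type InventoryEvent =
  | { eventType: 'InventoryCheckedIn'; payload: { itemId: string; quantity: number } }
  | { eventType: 'InventoryCheckedOut'; payload: { itemId: string; quantity: number } }
  | { eventType: 'InventoryAudited'; payload: { itemId: string; countedQuantity: number } };

// Folds the context into the current stock level (events assumed in sequence order).
function availableStock(history: InventoryEvent[]): number {
  let stock = 0;
  for (const event of history) {
    switch (event.eventType) {
      case 'InventoryCheckedIn':
        stock += event.payload.quantity;
        break;
      case 'InventoryCheckedOut':
        stock -= event.payload.quantity;
        break;
      case 'InventoryAudited':
        // Assumption: an audit replaces the computed level with the counted one.
        stock = event.payload.countedQuantity;
        break;
    }
  }
  return stock;
}

// Pure decision: returns the events to append, or throws if the rule is violated.
function decideCheckOut(history: InventoryEvent[], itemId: string, quantity: number): InventoryEvent[] {
  const stock = availableStock(history);
  if (quantity > stock) {
    throw new Error(`Insufficient stock: requested ${quantity}, available ${stock}`);
  }
  return [{ eventType: 'InventoryCheckedOut', payload: { itemId, quantity } }];
}
```

Note that the function never sees rename events. It cannot depend on them, which is exactly why they do not need to be part of the consistency check.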

The Query

We use a filter like:

    {
      eventTypes: ['InventoryCheckedIn', 'InventoryCheckedOut', 'InventoryAudited'],
      payloadPredicates: [{ itemId: 'abc-123' }]
    }

The event store returns all matching events and the highest sequence number in that set, which is 1842.

The decision is made based on this context. If it passes, we emit:

    [
      {
        eventType: 'InventoryCheckedOut',
        payload: { itemId: 'abc-123', quantity: 2 }
      }
    ]

We then append the new event using the same filter and the expected maxSequenceNumber (1842). Now, here’s the key part:

Valid Case: Non-conflicting update

The item was renamed (ItemRenamed event) at sequence number 1843.

This does not affect the outcome of the CheckOutInventory process. The context we care about remains unchanged. Append proceeds successfully.

Invalid Case: Conflicting update

In the meantime, someone checked out five units (InventoryCheckedOut at sequence number 1843). The relevant context has changed. Append is aborted. The command must re-query and re-evaluate, which may now produce a different result.

This is more than just an optimization. It’s a more precise model of causality.

Only relevant changes result in retries. Commands protect the facts they are concerned with. There are no global locks or false conflicts.
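
The two cases can be checked by hand with a small simulation. This is a simplified sketch: `contextMax` is a hypothetical helper, `itemId` is flattened onto the event instead of living in a payload, and the sequence number 1790 is made up to give the log a second entry. Only 1842 and 1843 come from the example above.

```typescript
interface LoggedEvent { sequenceNumber: number; eventType: string; itemId: string; }

const stockEventTypes = ['InventoryCheckedIn', 'InventoryCheckedOut', 'InventoryAudited'];

// Highest sequence number among the events that belong to the check-out context.
function contextMax(log: LoggedEvent[], itemId: string): number {
  return log
    .filter((e) => e.itemId === itemId && stockEventTypes.indexOf(e.eventType) !== -1)
    .reduce((max, e) => Math.max(max, e.sequenceNumber), 0);
}

// What the command saw when it queried.
const logAtQueryTime: LoggedEvent[] = [
  { sequenceNumber: 1790, eventType: 'InventoryCheckedIn', itemId: 'abc-123' },
  { sequenceNumber: 1842, eventType: 'InventoryCheckedOut', itemId: 'abc-123' },
];
const expectedMax = contextMax(logAtQueryTime, 'abc-123');

// Valid case: a rename lands at 1843 before the append.
const logAfterRename: LoggedEvent[] = [
  ...logAtQueryTime,
  { sequenceNumber: 1843, eventType: 'ItemRenamed', itemId: 'abc-123' },
];

// Invalid case: a competing check-out lands at 1843 before the append.
const logAfterCheckOut: LoggedEvent[] = [
  ...logAtQueryTime,
  { sequenceNumber: 1843, eventType: 'InventoryCheckedOut', itemId: 'abc-123' },
];

const appendAllowedAfterRename = contextMax(logAfterRename, 'abc-123') === expectedMax;
const appendAllowedAfterCheckOut = contextMax(logAfterCheckOut, 'abc-123') === expectedMax;
```

`appendAllowedAfterRename` is `true` because the context version is still 1842, while `appendAllowedAfterCheckOut` is `false` because the context version moved to 1843.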

PostgreSQL Implementation

To enforce this context-local consistency at the database level, we use a single atomic SQL statement that both:

- Re-evaluates the current max(sequence_number) of the context

- Appends new events only if the context has not changed

The append Operation

Here’s how the core append logic works:

await eventStore.append(events, filter, expectedMaxSequenceNumber);

- events: The new events to persist, as returned by the decision function.

- filter: The same event filter that defined the context of the command.

- expectedMaxSequenceNumber: The value observed during the query phase.

This combination ensures only relevant changes trigger a retry.

How It’s Enforced in SQL

We generate a query like this:

    export function buildCteInsertQuery(filter: EventFilter, expectedMaxSeq: number): { sql: string, params: unknown[] } {
      // WHERE conditions (and their parameters) that select the command's context.
      const contextVersionQueryConditions = buildContextVersionQuery(filter);
      const contextParamCount = contextVersionQueryConditions.params.length;
      // The event_type and payload arrays use the two placeholders after the filter parameters.
      const eventTypesParam = contextParamCount + 1;
      const payloadsParam = contextParamCount + 2;
      return {
        sql: `
          WITH context AS (
            SELECT MAX(sequence_number) AS max_seq
            FROM events
            WHERE ${contextVersionQueryConditions.sql}
          )
          INSERT INTO events (event_type, payload)
          SELECT unnest($${eventTypesParam}::text[]), unnest($${payloadsParam}::jsonb[])
          FROM context
          WHERE COALESCE(max_seq, 0) = ${expectedMaxSeq}
          RETURNING *;
        `,
        // Only the filter parameters are returned here; the two arrays must be appended by the caller.
        params: contextVersionQueryConditions.params
      };
    }

The context CTE (Common Table Expression) uses the same event filter as during the decision phase, matching event types and optional payload predicates. It returns the maximum sequence number found in that context.
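
The helper `buildContextVersionQuery` is not shown in this article. As a rough idea of what such a condition builder might produce, here is a hypothetical stand-in named `buildContextConditionsSketch`. It assumes that event types are matched with `= ANY(...)`, that each payload predicate is matched with PostgreSQL's jsonb containment operator `@>`, and that multiple predicates are combined with OR. The real helper in the package may be built differently.

```typescript
interface EventFilter { eventTypes: string[]; payloadPredicates?: Record<string, unknown>[]; }

// Hypothetical stand-in for buildContextVersionQuery: turns a filter into
// a WHERE fragment with numbered placeholders plus the matching parameter list.
function buildContextConditionsSketch(filter: EventFilter): { sql: string; params: unknown[] } {
  const conditions: string[] = [];
  const params: unknown[] = [];

  if (filter.eventTypes.length > 0) {
    params.push(filter.eventTypes);
    conditions.push(`event_type = ANY($${params.length}::text[])`);
  }

  const predicates = filter.payloadPredicates ?? [];
  if (predicates.length > 0) {
    // Assumption: an event belongs to the context if any one predicate is contained in its payload.
    const alternatives = predicates.map((predicate) => {
      params.push(JSON.stringify(predicate));
      return `payload @> $${params.length}::jsonb`;
    });
    conditions.push(`(${alternatives.join(' OR ')})`);
  }

  // An empty filter selects the whole log.
  return { sql: conditions.length > 0 ? conditions.join(' AND ') : 'TRUE', params };
}

const sketch = buildContextConditionsSketch({
  eventTypes: ['InventoryCheckedIn', 'InventoryCheckedOut', 'InventoryAudited'],
  payloadPredicates: [{ itemId: 'abc-123' }],
});
```

For the check-out filter, `sketch.sql` is `event_type = ANY($1::text[]) AND (payload @> $2::jsonb)`, with the event type array and the JSON string `{"itemId":"abc-123"}` as the two parameters.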

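The INSERT only proceeds if this value matches the expectedMaxSequenceNumber that was passed in. If not, no events are appended. The statement does not raise an error in that case; it simply inserts zero rows. Because of the RETURNING clause, the caller can tell that the context has changed by checking that no rows came back.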

From there, the command can simply be retried: re-queried and re-evaluated, with another attempt to append being made.
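
On the calling side, detecting the conflict comes down to counting the returned rows. The sketch below assumes a hypothetical `Queryable` shape (a node-postgres `Pool` or `Client` is expected to fit it, but that is an assumption), a hypothetical function name `runConditionalInsert`, and that the caller appends the event type and payload arrays after the filter parameters, in that order.

```typescript
// Minimal database shape needed here (hypothetical; kept small so it can be faked in tests).
interface Queryable {
  query(sql: string, params: unknown[]): Promise<{ rows: unknown[] }>;
}
interface NewEvent { eventType: string; payload: Record<string, unknown>; }

// Executes a conditional insert built by something like buildCteInsertQuery.
// Resolves to true if the events were written, false if the context had changed.
async function runConditionalInsert(
  db: Queryable,
  built: { sql: string; params: unknown[] },
  events: NewEvent[]
): Promise<boolean> {
  // With nothing to insert, zero returned rows would be ambiguous, so skip the round trip.
  if (events.length === 0) return true;

  const params = [
    ...built.params,                            // filter parameters: $1 .. $n
    events.map((e) => e.eventType),             // $n+1: text[]
    events.map((e) => JSON.stringify(e.payload)), // $n+2: jsonb[] passed as JSON strings
  ];

  const result = await db.query(built.sql, params);
  // RETURNING * yields one row per inserted event; zero rows means the check failed.
  return result.rows.length === events.length;
}
```

A `false` result is the signal to start over with a fresh query, which keeps the retry decision in application code rather than in the database.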

This approach has several advantages:

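- Atomicity: the check and the insert happen in a single statement, so either all events are appended or none are.

- Efficiency: no explicit locks are taken; the check costs one extra aggregate in the same statement. (Under PostgreSQL's default READ COMMITTED isolation, two overlapping appends that start at the same moment each evaluate the context against their own snapshot, so fully closing that window requires SERIALIZABLE isolation or an advisory lock.)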

- Precision: only relevant changes cause a conflict.

- Concurrency safety: multiple commands can operate in parallel with different filters without interfering with each other.

Implementation and Reference Code

The approach described here is fully implemented in the PostgreSQL-backed event store of the EventStore NPM package.

It’s part of an ongoing collaboration with Ralf Westphal, focused on building practical tools for Aggregateless Event Sourcing (AES) with Command Context Consistency (CCC).

The code is open source and includes:

- A minimal EventStore interface with clear semantics

- A PostgreSQL adapter that enforces context-local consistency via SQL

- Utilities to build filters, queries, and safe conditional inserts

- Examples of command-side usage and basic projections

You can explore the repository and usage examples here:

It's not a framework. There are no hidden conventions. Just a thin, explicit layer over PostgreSQL that helps you model decisions with precision without aggregates, and without unnecessary complexity.

Cheers!